Time Series (optional)

This module will help you acquire knowledge of time series analysis and forecasting by guiding you towards online resources. In addition, it will give you tips on how you can apply your newly gained knowledge to the creative brief.

Learning objectives

After this module, you will be able to:

- Acquire knowledge of time series analysis and forecasting by consulting online resources

- Apply knowledge of time series analysis and forecasting to the creative brief

Introduction to time series

Until now, you mostly worked with so-called cross-sectional data - i.e., a set of data values observed at a fixed point in time or where time is of no significance. For example, ProPublica's COMPAS dataset. In Block D, you have the chance to work with a different type of data, namely time series.

What is time series data, analysis & forecasting?

Time series analysis is a specific way of analyzing a sequence of data points collected over an interval of time. In time series analysis, analysts record data points at consistent intervals over a set period of time rather than just recording the data points intermittently or randomly. However, this type of analysis is not merely the act of collecting data over time.

What sets time series data apart from other data is that the analysis can show how variables change over time. In other words, time is a crucial variable because it shows how the data adjusts over the course of the data points as well as the final results. It provides an additional source of information and a set order of dependencies between the data.

Time series analysis typically requires a large number of data points to ensure consistency and reliability. An extensive data set ensures you have a representative sample size and that analysis can cut through noisy data. It also ensures that any trends or patterns discovered are not outliers and can account for seasonal variance. Additionally, time series data can be used for forecasting—predicting future data based on historical data (Source).

Also, check out LaBarr's video, where he explains the difference between the two data types.

Video 1. What is Time Series Data by Aric LaBarr.

Forecasting applications

The applications of time series models are manifold. Below are five examples taken from various industries:

- Forecasting daily power demand to decide whether to build another power generation plant

- Forecasting weekly call volumes to schedule staff in a call center

- Forecasting weekly inventory requirements to stock inventory to meet demand

- Forecasting daily supply and demand to optimize fleet management and other aspects of the supply chain

- Forecasting daily infection rates to optimize disease control and outbreak programs

Toy datasets

You can find many toy datasets online, with Air Passengers undoubtedly being the most popular.

Feeling creative?! Clio-Infra offers some quirky time series datasets on their website, such as the number of sheep per capita ranging from the year 1500 to 2010.

Figure 1. Lamb of (oh my) God: disbelief at ‘alarmingly humanoid' restoration of Ghent altarpiece by the Guardian.

In 2010, the Netherlands Organisation for Scientific Research (NWO) awarded a subsidy to the Clio Infra project, of which Jan Luiten van Zanden was the main applicant and which is hosted by the International Institute of Social History (IISH). Clio Infra has set up a number of interconnected databases containing worldwide data on social, economic, and institutional indicators for the past five centuries, with special attention to the past 200 years. These indicators allow research into long-term development of worldwide economic growth and inequality (Source).

Notation

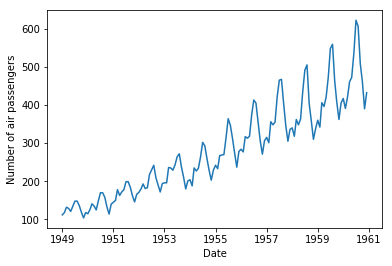

Let's start to inspect the Air Passengers dataset. When we visualize it in a line graph, we get the following:

Figure 2. Visualization of the Air Passengers time series dataset.

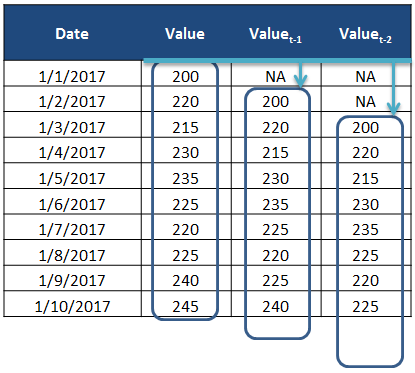

Before we dive deeper into time series analysis and forecasting, we need to take a look at some standard notation:

The current time is defined as t, an observation at the current time is defined as obs(t). We are often interested in the observations made at prior times, called lag times or lags. Times in the past are negative relative to the current time. For example the previous time is t-1 and the time before that is t-2. The observations at these times are obs(t-1) and obs(t-2) respectively. Times in the future are what we are interested in forecasting and are positive relative to the current time. For example the next time is t+1 and the time after that is t+2. The observations at these times are obs(t+1) and obs(t+2) respectively. For simplicity, we often drop the obs(t) notation and use t+1 instead and assume we are talking about observations at times rather than the time indexes themselves. Additionally, we can refer to an observation at a lag by shorthand such as a lag of 10 or lag=10 which would be the same as t-10.

To summarize:

t-n: A prior or lag time (e.g. t-1 for the previous time). t: A current time and point of reference. t+n: A future or forecast time (e.g. t+1 for the next time).

(Source).

Figure 3. Lags in a data table.

Components

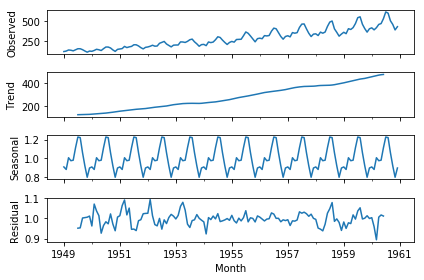

To understand a forecasting task, it is useful to think of a time series as a combination systematic (i.e., level, trend, and seasonality), and non-systematic (i.e., noise) components. Additionally, all time series have a level, and noise component. The trend and seasonality components are optional.

Figure 4. High-level decomposition of a time series.

- Level: The average value in the series

- Trend: The increasing or decreasing value in the series

- Seasonality: The repeating short-term cycle in the series

- Noise: The random variation in the series

Figure 5. Decomposition of the Air Passengers dataset.

For a more in-depth explanation on decomposing time series (e.g., additive vs. multiplicative model), see video below:

Video 2. What is Time Series Decomposition by Aric LaBarr.

Algorithms

There are many ways to model a time series to make predictions. For example:

- moving average

- exponential smoothing

- ARIMA

Want to go beyond the basics, and apply advanced models? Then, consider looking into algorithms such as SARIMAX, VARMAX, CNN, and (autoregressive) LSTM.

Things to consider…

- How much data do you have available and are you able to gather it all together? More data is often more helpful, offering greater opportunity for exploratory data analysis, model testing and tuning, and model fidelity.

For example, ARIMA models need a minimum of 30 data points, while deep learning based models generally need significantly more data points.

- What is the time horizon of predictions that is required? Short, medium or long term? Shorter time horizons are often easier to predict with higher confidence.

There are many ways to forecast time series data. Some algorithms perform better with shorter time horizons (e.g., ARIMA), while others perform better with larger time horizons (e.g., Prophet). Furthermore, as mentioned earlier, shorter time horizons are generally easier to predict with high confidence (i.e., short time horizon - lower degree of uncertainty, long time horizon - higher degree of uncertainty), therefore they often have smaller prediction intervals (i.e., blue bands in the picture below).

Figure 6. Visualization of the prediction intervals of a time series forecast.

For a quick tutorial on applying the ARIMA model to your time series dataset, check the article ARIMA model in Python.

Need more in-depth information, see the Section Blended Learning, which lists several (extensive) online courses on the topic of time series analysis and/or forecasting.

-

Can forecasts be updated frequently over time or must they be made once and remain static? Updating forecasts as new information becomes available often results in more accurate predictions.

-

At what temporal frequency are forecasts required? Often forecasts can be made at a lower or higher frequencies, allowing you to harness down-sampling, and up-sampling of data, which in turn can offer benefits while modelling.

Time series data often requires cleaning, scaling, and even transformation. For example:

-

Frequency. Perhaps data is provided at a frequency that is too high to model or is unevenly spaced through time requiring resampling for use in some models.

-

Outliers. Perhaps there are corrupt or extreme outlier values that need to be identified and handled.

-

Missing. Perhaps there are gaps or missing data that need to be interpolated or imputed.

It is very likely that you will encounter missing values in a dataset (e.g., weather station data). In order to fit your time series data, and subsequently make an accurate prediction with your model, you need to replace the missing data values. There are various methods available that will interpolate or impute your data.

Need more information about how to sensibly handle missing data, check out the following article and/or DataCamp courses:

- Manipulating Time Series Data in Python

- Dealing with Missing Data in Python

- How to Fill Missing Data with Pandas

Lastly, some time series methods require the data to meet certain statistical assumptions. For example, ARIMA requires your series to be stationary, while Prophet does not have this specific requirement.

A stationary process has the property that the mean, variance and autocorrelation structure do not change over time. Stationarity can be defined in precise mathematical terms, but for our purpose we mean a flat looking series, without trend, constant variance over time, a constant autocorrelation structure over time and no periodic fluctuations (seasonality) (Source).

So before you start to model your data, look into the (model-specific) statistical assumptions.

(Source)

Blended learning

There are many online resources available on the topic of time series analysis, and/or forecasting. Please, check the following resources:

Python:

- Manipulating Time Series Data in Python

- Time Series Analysis in Python

- Visualizing Time Series Data in Python

- ARIMA Models in Python

- Machine Learning for Time Series Data in Python

R:

- Forecasting: Principles and Practice

- Manipulating Time Series Data with xts and zoo in R

- Time Series Analysis in R

- ARIMA Models in R

- Forecasting in R

- Visualizing Time Series Data in R

Power BI:

Theory: