Data Augmentation

A key concern when training a convolutional neural network is overfitting. A model, so tuned to the specifics of the training data that it is unable to generalize to new data.

Two techniques to prevent overfitting when building a CNN are:

1) Dropout regularization - Randomly removing units from the neural network during a training gradient step. A technique you know well by now, in case not, take a step back and go through Image Classification Codecademy course regularization chapter.

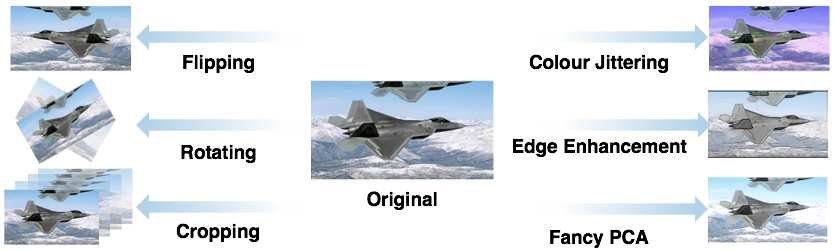

2) Data augmentation - artificially boosting the diversity and number of training samples by performing random transformations to existing images to create a set of new variants.

Also, Data augmentation is especially useful when the original training data set is relatively small. Remember, Data augmentation should only be applied to the training set.

watch this video to get an overview of augmentation techniques:

Note: Overfitting is more of a concern when working with smaller training data sets. When working with big data sets (e.g., millions of images), applying dropout is unnecessary, and the value of data augmentation is also diminished.

Now, with the understanding of augmentation concepts for the rest of the day you will be researching how to implement data augmentation techniques, followed by implementing them to your creative brief project.

Luckily, our friend tensorflow has all the complicated image processing details wrapped in functions for us to use. Follow this tensorflow article as your primary research source and use it for your creative brief data sets. Try to accommodate as much as possible techniques and not only resizing or rotating features as example.

[Optional] Extra reading material on Fancy PCA Color Augmentation, it will be great to incorporate these as well.